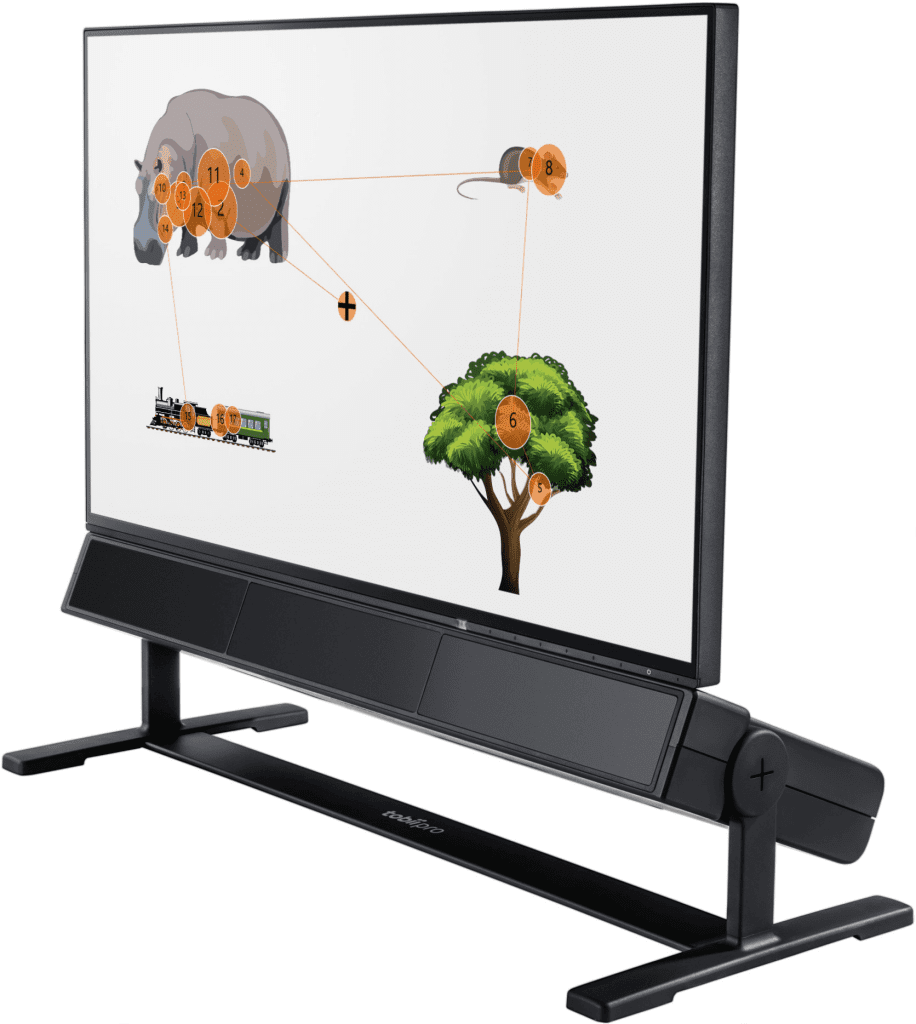

On November 15, 2023, Tobii updated Tobii Pro Lab, our one-stop software platform for eye-tracking research. Version 1.232 introduces a brand-new project type: the Advanced Screen Project (Advanced Screen-based Project, ASP). ASP changes the way experimental designs were organized in the traditional Screen Project, making it better suited to trial-based designs in which multiple stimuli are presented simultaneously.

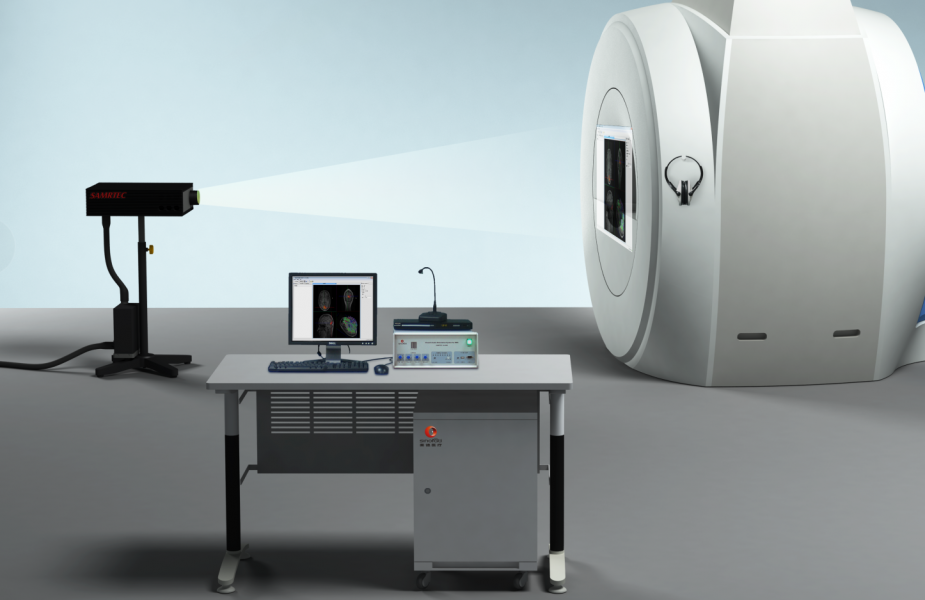

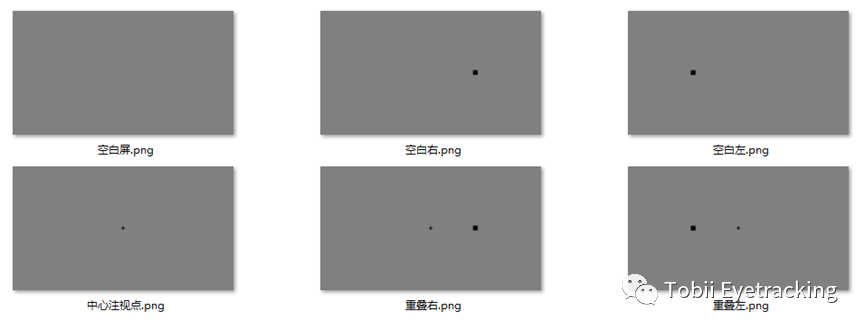

In Tobii Pro Lab, traditional screen-based projects require the researcher to combine all the stimulus images that appear simultaneously in a trial on a single canvas. For example, the gap-overlap (blank-overlap) paradigm requires a central stimulus and a peripheral stimulus to be presented to the subject in different orders and at different positions. The researcher therefore has to compose every trial image in advance in drawing software (as shown below).

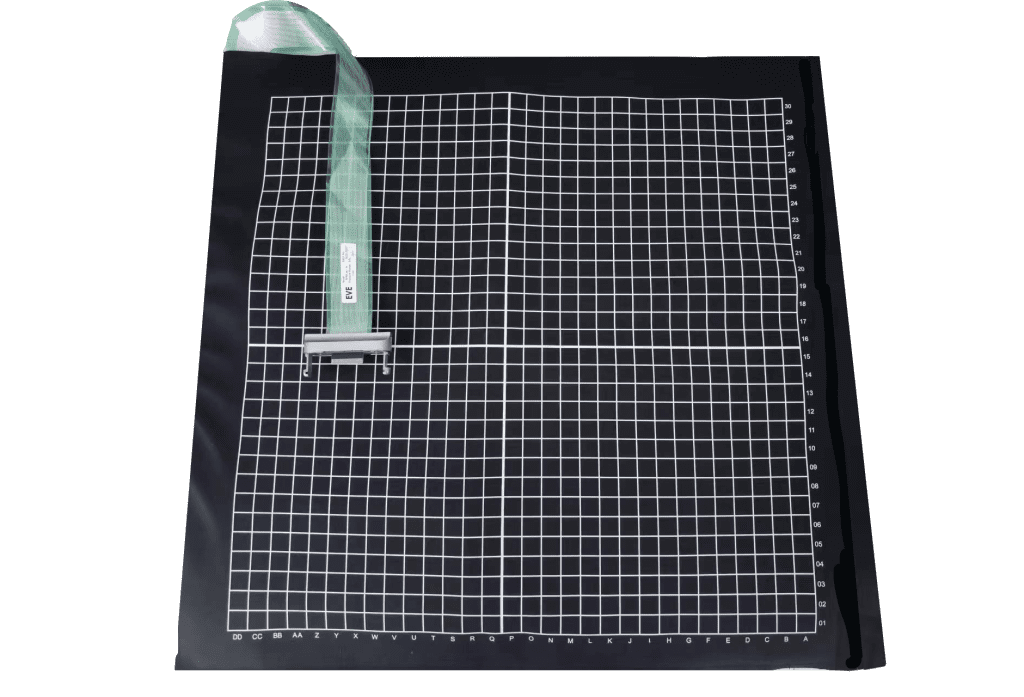

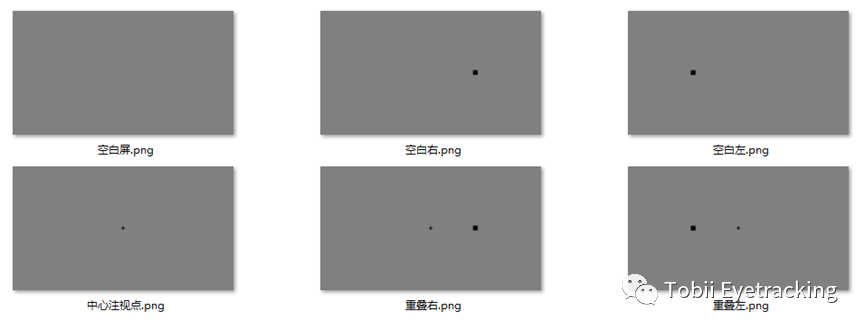

Note that this paradigm is just one example. If a researcher uses a dot-probe paradigm to explore attentional bias, stimuli with different properties have to be counterbalanced across the sides of the screen, which multiplies the number of combinations. The researcher may then need to produce dozens or even hundreds of trial images. In some cases the researcher even needs to arrange multiple stimuli in a matrix, and preparing the trial images becomes very time-consuming and error-prone (as shown in the figure below).

Figure from Wang et al. (2015)

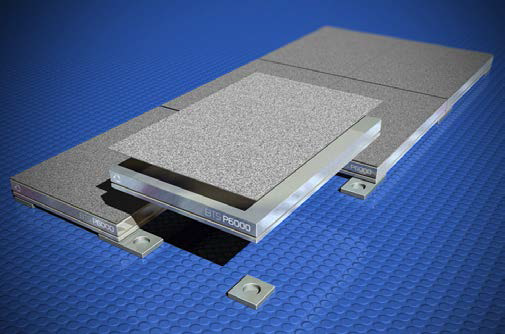

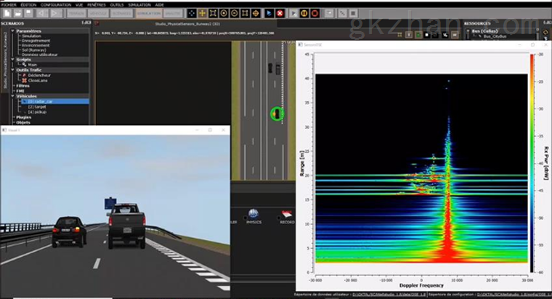

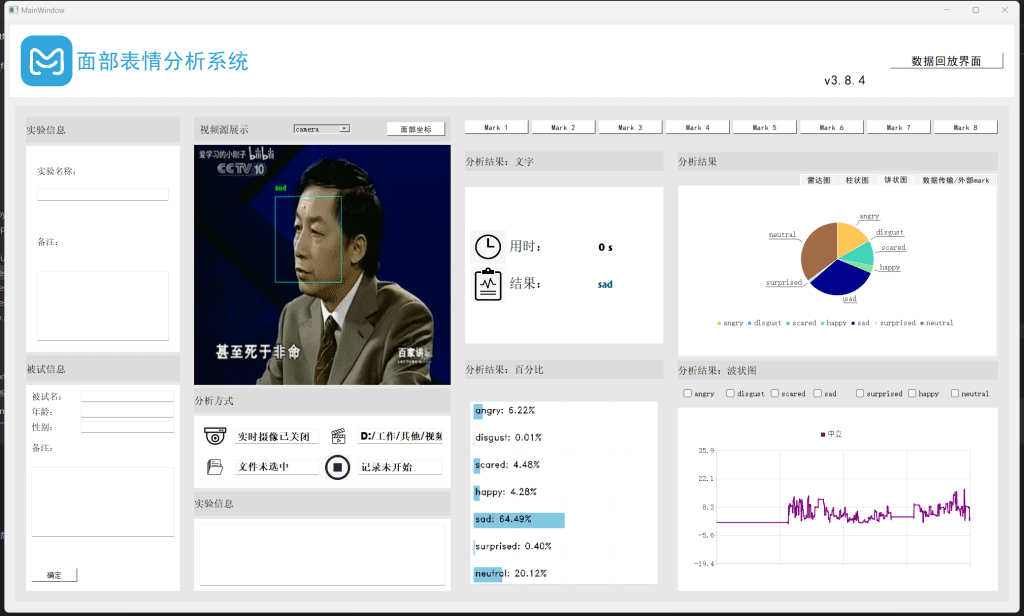

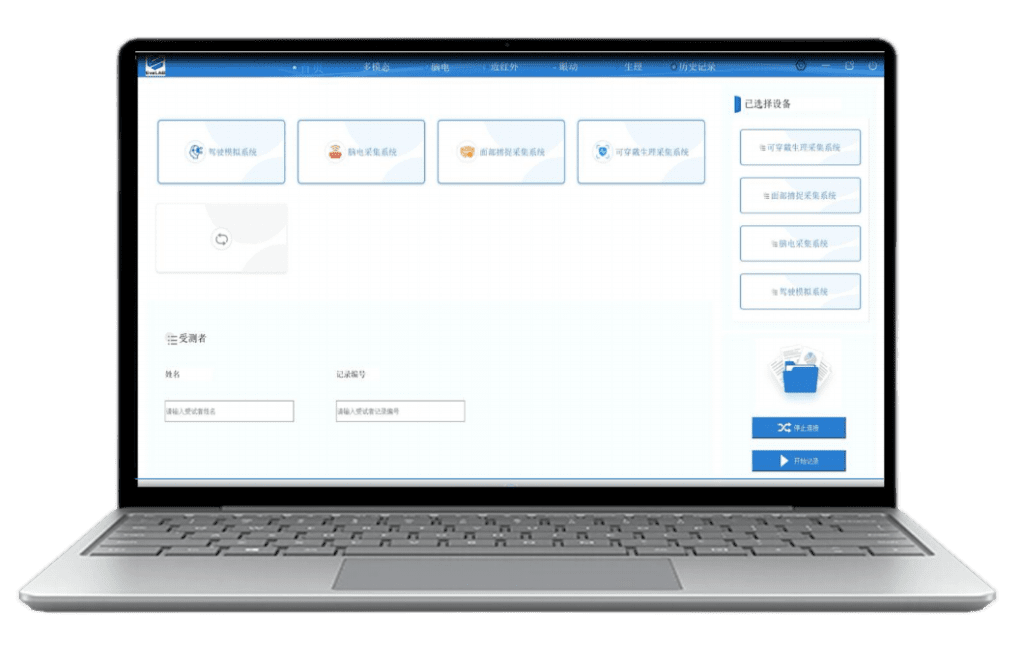

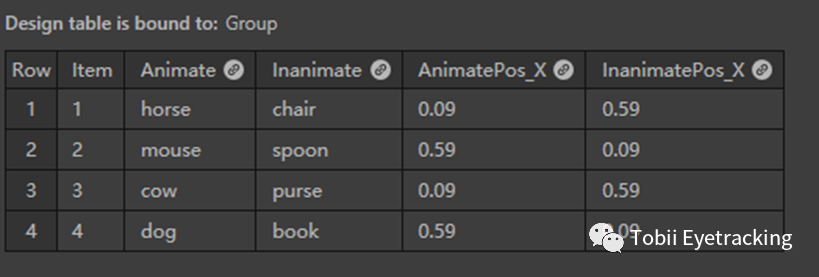

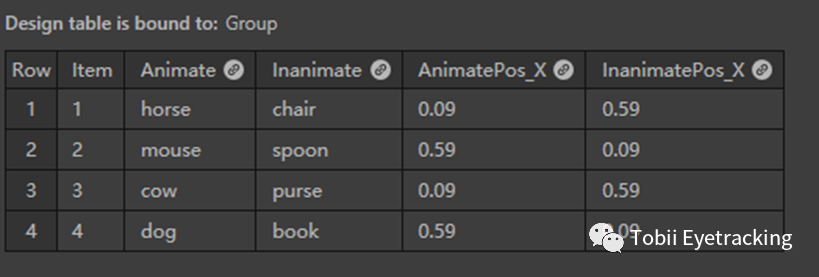

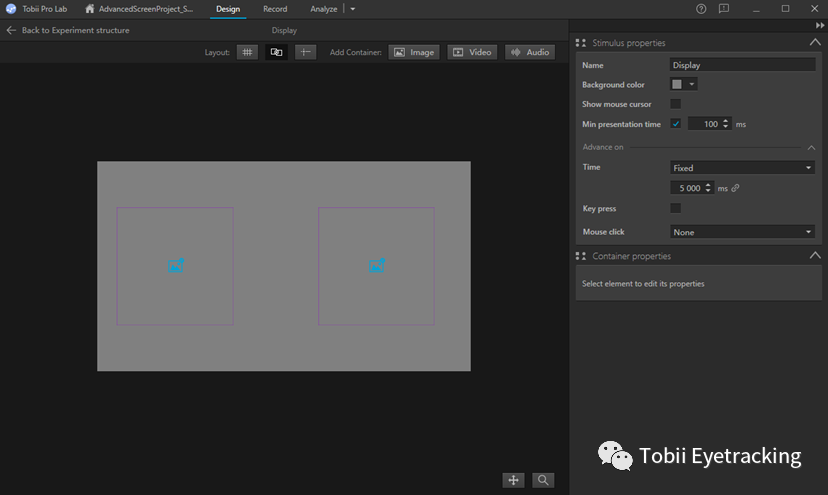

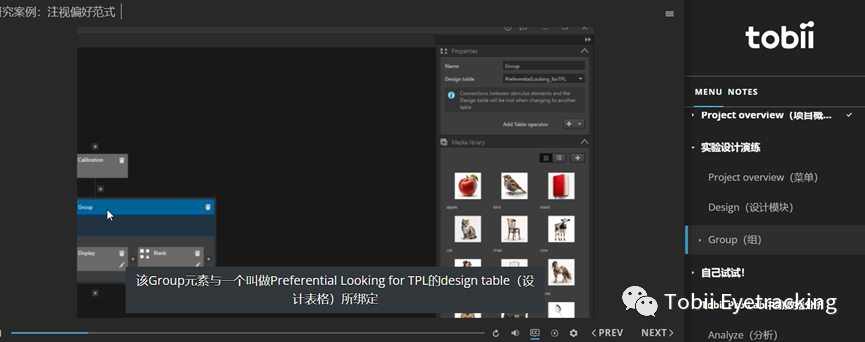

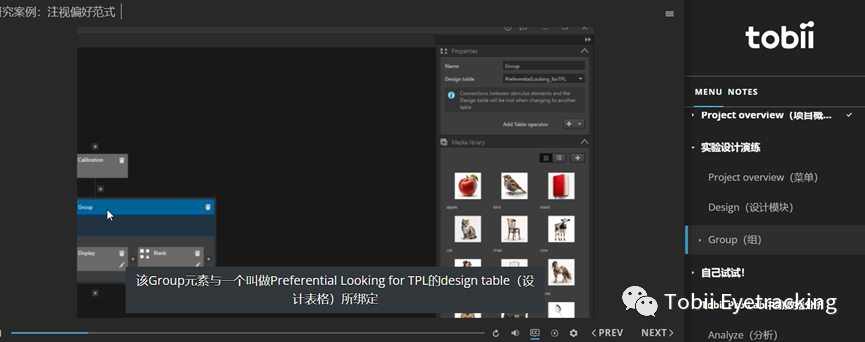
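To put a rough number on the growth described above, the following minimal sketch enumerates the composite images that would have to be drawn by hand for a simple two-sided design. The file names and the count of 20 stimulus pairs are purely illustrative assumptions, not taken from any real study.

```python
from itertools import product


def enumerate_composites(pairs, sides=("left", "right")):
    """Return one record per composite image that must be pre-rendered.

    Each stimulus pair is shown twice so that the first member appears
    once on each side of the screen (simple position counterbalancing).
    """
    composites = []
    for (first, second), first_side in product(pairs, sides):
        second_side = sides[1] if first_side == sides[0] else sides[0]
        composites.append({first_side: first, second_side: second})
    return composites


# Hypothetical stimulus set: 20 threat/neutral picture pairs.
pairs = [(f"threat_{i:02d}.png", f"neutral_{i:02d}.png") for i in range(1, 21)]
composites = enumerate_composites(pairs)
print(len(composites))  # 20 pairs x 2 sides = 40 hand-made images
```

Every additional factor (more screen positions, more stimulus categories, a matrix layout) multiplies this count again, which is where the manual workload comes from.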

The new Advanced Screen Project type in Tobii Pro Lab solves this problem neatly. The researcher imports all the elements that make up a stimulus into the Media library and uses the Design table to specify the location, size, and other properties of each stimulus element in each trial. If you have ever used a table-based experiment design tool such as E-Prime or PsychoPy, this approach will feel familiar (as shown below).

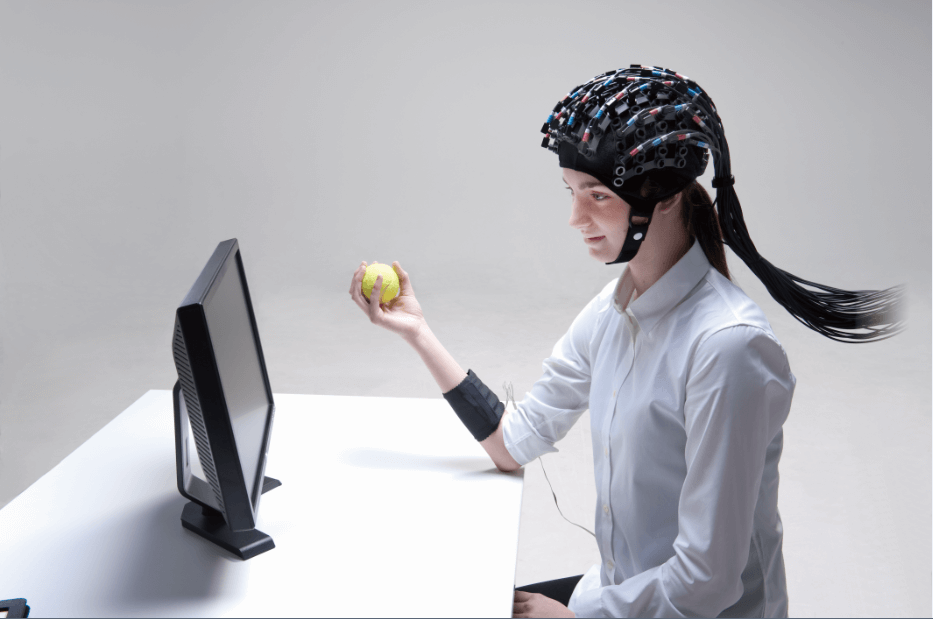

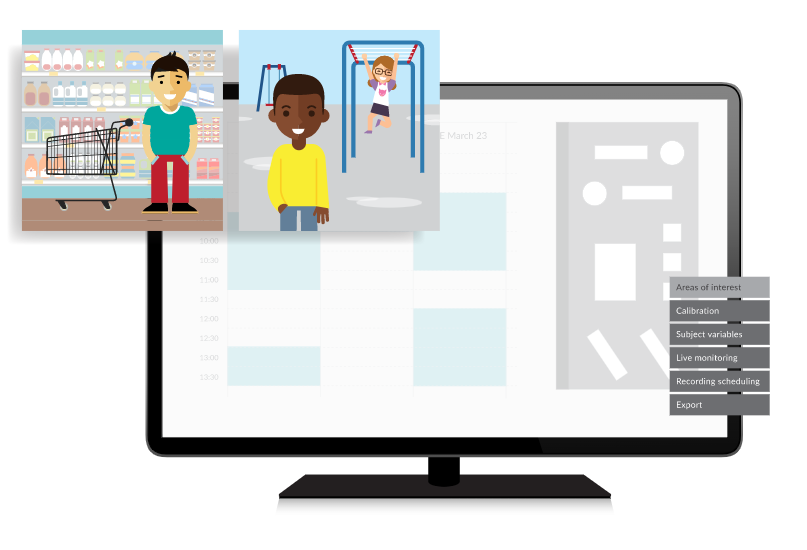

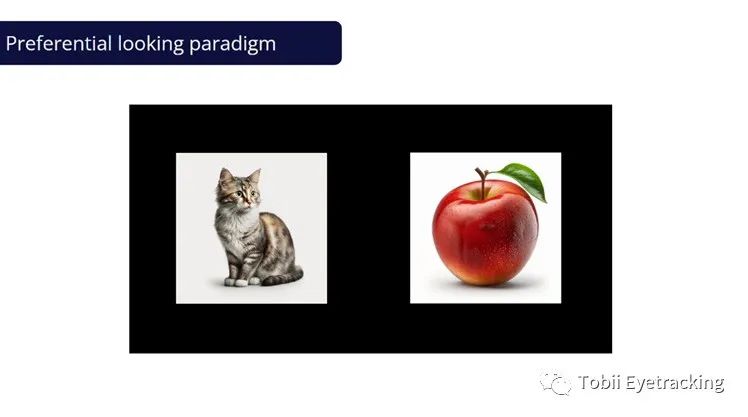
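The table-based idea can be sketched in a few lines of Python. This is only an illustration of the concept: the column names (`trial`, `element`, `file`, `x`, `y`, `width`, `height`), the pixel coordinates, and the file names are assumptions made for this example and are not the actual Design table format of Tobii Pro Lab.

```python
import csv
import io

# Assumed centre positions (in pixels) of the two on-screen slots.
SLOTS = {"left": (480, 540), "right": (1440, 540)}


def build_design_rows(pairs, size=(400, 400)):
    """Build one row per stimulus element per trial.

    Each pair yields two trials so that both images appear on both sides.
    """
    rows = []
    trial = 0
    for first, second in pairs:
        for left_file, right_file in ((first, second), (second, first)):
            trial += 1
            for side, filename in (("left", left_file), ("right", right_file)):
                x, y = SLOTS[side]
                rows.append({
                    "trial": trial, "element": side, "file": filename,
                    "x": x, "y": y, "width": size[0], "height": size[1],
                })
    return rows


def rows_to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()),
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = build_design_rows([("cat.png", "car.png"), ("dog.png", "chair.png")])
print(rows_to_csv(rows))
```

Here four source images are enough to describe four trials. With a table like this, only the individual elements need to exist as files, and the combinations are expressed as rows rather than as hand-composed pictures.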

To better explain the new features of ASP, we will draw on a widely used experimental paradigm, the preferential looking paradigm. The APA Dictionary of Psychology defines it as "An experimental method for assessing the perceptual abilities of nonverbal individuals". When a "more interesting" stimulus is presented together with a "less interesting" one, infants look preferentially at the "more interesting" stimulus, provided that they are able to distinguish between the two. This paradigm is therefore often used in developmental psychology to investigate whether infants have developed a specific cognitive ability at a particular stage of development.

In the preferential looking paradigm, two visual stimuli are usually presented to the subject simultaneously, while eye-tracking equipment records where the subject's gaze falls. If the infant looks longer or more often at stimuli with one attribute than at stimuli with the other attribute, it can be inferred that the infant is more interested in stimuli with that attribute, and therefore that the infant has developed the ability to tell the two attributes apart. Combined with the cross-sectional designs commonly used in developmental psychology, testing infants at different developmental stages makes it possible to infer the critical period in which this ability develops.
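The comparison of looking times can be expressed as a simple proportion. The sketch below assumes that fixations have already been assigned to areas of interest (AOIs) and are available as `(aoi_name, duration_ms)` pairs; this data layout and the AOI names are hypothetical and do not represent a Tobii Pro Lab export format.

```python
def looking_preference(fixations, target_aoi, other_aoi):
    """Proportion of looking time spent on target_aoi.

    Only fixations on one of the two AOIs are counted. Returns None when
    the subject looked at neither stimulus. A value of 0.5 means no
    preference; values above 0.5 indicate a preference for target_aoi.
    """
    totals = {target_aoi: 0.0, other_aoi: 0.0}
    for aoi, duration_ms in fixations:
        if aoi in totals:
            totals[aoi] += duration_ms
    looked = totals[target_aoi] + totals[other_aoi]
    if looked == 0:
        return None
    return totals[target_aoi] / looked


# Hypothetical fixation list for one trial.
fixations = [("A", 420), ("B", 180), ("A", 600), ("offscreen", 250)]
score = looking_preference(fixations, "A", "B")
print(score)  # 1020 ms of 1200 ms on "A" gives 0.85
```

Whether a score differs reliably from 0.5 is a statistical question across trials and subjects, which this sketch does not address.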

To explore the acquisition of Chinese core grammar in preschool children with autism spectrum disorder, Yi Su (2018) proposed a research approach based on the preferential looking paradigm: "Participating children watched two dynamic videos presented simultaneously, while the auditory stimulus described only one of the events. If the child looked at the matching event for longer than at the non-matching event (compared to baseline), the child was assumed to be able to comprehend that auditory stimulus."

If a researcher wants to investigate when infants develop the ability to recognize animacy in visual stimuli, they need to place animate and inanimate visual stimuli on the two sides of the screen while recording the infant's eye movements. The learning materials Tobii provides this time are based on this research idea.

Tobii has prepared a wealth of learning materials to help you quickly master this powerful tool for your research. The materials include a sample experimental project built in Tobii Pro Lab with ASP and based on the preferential looking paradigm, accompanying descriptions, the image materials used to build the experiment, and sample exported data. Researchers can rebuild the experiment from these materials and samples to familiarize themselves with the new features of ASP.

Most importantly, we have created an interactive online course in a format similar to Tobii Academy. In this case study, we show the whole process of building an experiment in ASP from scratch, using the preferential looking paradigm as an example, and all the tutorials come with a Chinese interface and subtitles. You can access it by clicking [Read Article] below.

More demonstrations and teaching materials on the new ASP features of Tobii Pro Lab will be released over the coming weeks, so stay tuned to the Tobii Eyetracking WeChat official account and discover the value of eye tracking with us.

References

- Su, Yi (2018). Acquisition of Chinese Core Grammar in Preschool Children with Autism Spectrum Disorder. Advances in Psychological Science, Vol. 26, No. 3, 391-399.

- Wang F. X., Li W. J., Yan C., Duan C. H., Li H. (2015). Attentional awareness of threatening stimulus snakes in young children: evidence from eye movements. Acta Psychologica Sinica, Vol. 47, No. 6, 774.

- "preferential looking technique". APA Dictionary of Psychology. Retrieved 2021-06-10.

This article comes from the WeChat official account: EVERLOYAL